Thank you for filling these out…Also, if you have a project you would like to share with Dean Brown and your teachers, please provide a link in the comments section below.

In spite of numerous efforts to ensure educational equality, some groups of students achieve at lower levels than others. The first challenge in looking at the achievement gap is narrowing down exactly what we mean by achievement gap. There are, in fact, a number of different achievement gaps. Students of color, students with disabilities, students from non-English speaking households, and students from low-income families demonstrate achievement gaps when compared with affluent white students. Additionally, disparities can be seen between male and female students throughout the average student’s academic career. There are a number of potential variables that could be the cause of the various gaps: underfunding of school districts, culturally non-responsive curriculums, lack of pre-school education, and home factors, to name a few. All of this takes place against a shifting background of demographic changes and policy revisions at the federal, state, and local level. The achievement gap is truly a wicked problem.

This week I have been working hard with my colleagues to get our heads around this big, gangly issue. Throughout this process, it has been very hard to hold back judgment and ask open questions. I have an instinct to quickly analyze the problem and start brainstorming solutions. It actually takes a great deal of discipline to stay in the stage of asking ‘why’ questions. For me the greatest challenge of design thinking has been applying a beginner’s mindset to a field in which I have experience. When you see something every day, it can be hard to see it from a new perspective. I made this infographic to try to take a broad view of my understanding of the problems currently. In the last section, I ask the four questions my colleagues and I will be using as we go forward trying to solve this wicked problem.

Our wicked problem group is deep into our work solving the wicked problem of the achievement gap. We have established a clear, shared definition of the achievement gap. And we have looked at the many variables at work and the numerous solutions that have been tried in the past. We each made infographics to share our overview of the wicked problem and commented on one another’s work in hope of better understanding each other’s insights and perceptions.

Early in the week, we met and put together a set of “why” questions. In A More Beautiful Question, Warren Berger (2014) says that “why” questions are questions of “seeing and understanding” (p. 75). After spending some time generating these thinking and understanding questions, we started determining some possible areas of attack for our wicked problem. But instead of just jumping in and coming up with solutions, we put together a survey to distribute to teachers in our professional networks. Why? Because we might be missing something. We might be assuming things that aren’t true. We want our solution to be useful to the end-user. We want it to help them attack this problem in a way that is comfortable and useful for them. Most importantly, we don’t want to come up with a solution that has no basis in what the end-user (in this case the teacher) would actually use. So we put together a survey and are asking for feedback from anyone who teaches in a K-12 setting. We hope to use the data from this survey to craft a more responsive and relevant solution to the wicked problem of the achievement gap.

You can take the survey here:

While we know that wicked problems tend to be immune to perfect solutions, we know that with enough feedback from teachers and enough asking of big questions we will be able to put forward our “best bad solution”.

Resources

Berger, W. (2014). A more beautiful question: the power of inquiry to spark breakthrough ideas. New York: Bloomsbury.

It has been a challenging and wonderful experience working with my three colleagues, Erin, Courtney, and Jaymie, trying to find a solution to the wicked problem of the achievement gap. Here is a video that describes the problem, our journey, and our proposed solution.

It is hard to believe that our time has come to an end, but it has. My course on assessment is closing, and I have been asked to reflect on the experience and the evolution of my thoughts on assessment.

To begin, I reviewed the three things I wrote I believed about assessment at the beginning of the semester.

Looking back on these, I still largely agree with the premise of the three although a number of details have been filled in. Additionally, this course has given me a number of new tools and pathways for inquiry into my use of assessment.

If we define the desired outcome of education to be the acquisition of a certain understanding or skill, it is only through assessment that we can know that it has been learned. As a jazz student at the University of New Orleans, I was fortunate enough to study with the great Ellis Marsalis. Prof. Marsalis and the other faculty at the school had a mantra that was a question: “But can he play?” Sure, the student is doing the work and coming to class, but when they get up on the bandstand, can they actually do what we think they can?

This is an essential function of assessment: can you do what you think you can do? The problem is that this sort of outcome-based summative assessment is often seen as the only type of assessment there is. Additionally, the pressure to perform on these high-stakes assessments has led many students, and teachers for that matter, to view assessment as the measure of a fixed ability that reflects either success or failure. Throughout the course we have been presented with a variety of assessment types: formative assessment, summative assessment, assessment of learning, assessment for learning, assessment as learning.

In a way, all learning is a form of assessment. You gain information, you see if you can apply it, then you try again. It is this loop of learning, testing, feedback, reevaluation that allows all creatures to learn in their environments. Sadly, we have learned to have contempt for the testing and feedback portion of this loop, and as a result, we have created schools and students that are afraid to take chances and afraid to get things wrong. In a way, we are creating people who are afraid to learn. Assessment is not to be feared or tolerated, it is to be appreciated as an essential (arguably the central) component in learning.

One of my favorite passages in Alice’s Adventures in Wonderland goes as follows:

‘Would you tell me, please, which way I ought to go from here?’

‘That depends a good deal on where you want to get to,’ said the Cat.

‘I don’t much care where—’ said Alice.

‘Then it doesn’t matter which way you go,’ said the Cat. (Carroll, 2008)

This quote says so much about the value of assessment. Assessment should point to where we are going and test where we have been. In the diverse classrooms in which we teach today, students are diverse in terms of experience, ability, and background. This creates unique challenges and opportunities. We have so many tools to identify the specific areas in which a student is lacking understanding, and there is no dearth of resources available for helping students learn. The difficulty is putting it all together. Teachers need quality data about student performance and a clear idea of how to get their students the help that they need.

A particular challenge that goes along with this view of assessment is student motivation. In the words of Dr. Lorrie Shepard (2000), “teachers need help in fending off the distorting and de-motivating effects of external assessments” (p. 7). But here assessment can serve as an affordance rather than a constraint. When students are invested in their learning and have a clear sense of where they are and where they are going, they can be more autonomous learners. For example, digital portfolios have been found to be a very effective means of gauging student progress when teachers are comfortable with the technology being used and employ assessment for learning strategies (Barrett, 2007).

Feedback is an essential element in knowing that you are on the right track. We have all had the experience of trying to give directions to someone and saying something like, “if you get to the red barn with the dog barking in front of it, you went too far.” It is feedback that tells us how we are doing on the path, and having quick, actionable feedback is essential to a quality learning experience. As we read in Van den Bergh, Ros, & Beijaard (2013), feedback given during active learning is most effective.

As teachers in the 21st century, we have tools that most generations could never have dreamed of. Additionally, the requirements for our students–their abilities to confidently navigate various technological and social settings, their ability to creatively solve problems, and their need to be self-motivated and independent–are unique to our age as well. Here technology offers wonderful opportunities to meet these needs, but it will require a fundamental reexamination of learning and teaching.

We are all familiar with the importance of differentiated learning. Technology can greatly facilitate this. Whether it is using technology to create a comprehensive reading assessment that lets teachers know both their students’ reading skills and habits, as I tried to do, or drawing on the very rich data that can be collected from standardized tests, teachers can gather an incredible amount of data and link it to useful resources that are more tailored to the students’ needs. Not only does this help students learn better, but having students work on material that is appropriate and relevant to them can also help in classroom management and recruitment of students’ intrinsic motivations.

But in order to do this, it has to feel authentic to the learner; it has to feel like it is theirs and it has to engage them creatively. If we want students to not feel learning is a stick they are being measured against, we need to craft learning and assessment scenarios that feel more like games than physical exams. Games offer a unique opportunity to explore this. I was recently invited to play the card game Smash Up with my two sons. The volume of complex information that they were processing at lightning-quick speed was astonishing. Now how do we get that type of learning and mastery of the concepts we are teaching in school? Games provide players the opportunity to engage in active learning that has them “experiencing the world in new ways, forming new affiliations, and preparing for future learning” (Gee, 2003, p. 24). And they will do all of this, even when it requires great effort. I would like to be able to blend this sort of learning with assessment.

When making the game Escape from the Divisor, I tried to combine these two elements. Using Twine, I took the user through the concept of dividing fractions, checking to see that they had a working understanding of how to divide fractions. As we saw in the work of Randy Bennett (2011) in Module 2, there is an interplay between formative and summative assessment. Throughout the game, I tried to incorporate mini-lessons for students who either missed questions or did not feel confident answering them. To get the key and escape from the divisor, a student needed to pass all of the modules, but they had opportunities to learn and get feedback throughout the game.

Another interesting tool for differentiation available in this setting is crafting distractors with common misconceptions that can be used as teachable moments for students. In this way, the assessment becomes an assessment that is also teaching the student as they take it. While this idea of assessment as learning may sound a little heady, it is, in fact, how we learn to do just about everything. When you try to cook an omelet, you are taking an assessment. You take what you think you know, apply it, get feedback (taste, texture, etc.) and then reevaluate your knowledge. The more precisely you can identify the specific knowledge that you are lacking (the flavor is not good, I can’t flip it over in time), the better chance you will have to master the skill next time.

It is through this process that the student develops a sense of mastery and autonomy. They begin to understand the essential principles that underlie a particular skill or concept. In the omelet example, you need to learn about cooking temperature, different cooking surfaces, how heat affects egg texture, ratios for salt and other seasonings. Once you know how to make a good omelet these same principles can be applied in a new scenario, making scrambled eggs. While all of the parameters are similar the values of those parameters (especially heat) are very different in this new scenario.

While trends come and go in education, there is an underlying theme to so much of 21st-century learning: “active learning works best. Telling doesn’t work very well. Doing is the secret. Active student engagement is necessary, and one of the best ways to get it is to use stories that catch students’ interest and emotion” (Herreid & Schiller, 2013, p. 65). Technology affords us the opportunity to invent these types of learning scenarios. But it takes a great deal of work, creativity, and most importantly, the willingness to let go of some preconceived ideas of what “good” education is. For example, it is good to get a question wrong; that is how you learn. It is good for students to be working at their own pace, guided by their own interest. It is good for the teacher to not know all the answers, but rather be a facilitator in the student’s own construction of knowledge.

One way to begin this process was to create the assessment design checklist, which I have referred to frequently throughout the course. The checklist provides me with clear benchmarks for creating the types of assessment that I want to create. I find that whenever I work with the checklist I find oversights to address and new things to add. For example, in the reading assessment, my assessment design checklist suggested that I need to focus “on the most relevant skills and big ideas in the topic”. This prompted me to make a whole new section to my reading assessment focused on decoding and comprehension. And while working on this my design checklist encouraged me to promote student autonomy which led to the addition of the username and avatar to the application.

My ideas about assessment have changed greatly through this course. Not only do I now see assessment as a central element in the learning process, rather than a snapshot taken from outside of that process, I now see assessment as needing to be creative and constructive. Not just constructive in the typical constructive criticism way, but more importantly, constructive meaning that it facilitates the students’ construction of meaning by drawing on the students’ backgrounds and interests and gives them space to explore, get feedback, and adjust. Assessment is essential in this process. “From a sociocultural perspective, formative assessment — like scaffolding — is a collaborative process and involves negotiation of meaning between teacher and learner about expectations and how best to improve performance” (Shepard, 2005). While the outcomes of my three things I believe about assessment seem to be similar, when comparing this list with my initial list I am struck by the fact that my first list is about what assessment should be. It sees assessment as a narrow component of instruction that can be perfected. My new ideas about assessment center on what assessment can do, and the essential role it plays in creating rich learning experiences for students. Before, I was trying to find a better assessment; now I am thinking about how assessment can better learning. This is a very different view of assessment, one I look forward to exploring and expanding throughout my life as a teacher and scholar.

Barrett, H. (2007). Researching Electronic Portfolios and Learner Engagement: The Reflect Initiative. Journal of Adolescent & Adult Literacy, 50(6), 436-449. Retrieved from http://www.jstor.org.proxy1.cl.msu.edu/stable/40015496

Bennett, R. E. (2011). Formative assessment: A critical review. Assessment in Education: Principles, Policy & Practice, 18(1), 5-25. doi: 10.1080/0969594X.2010.513678

Carroll, L. (2008). Alice’s adventures in Wonderland. Project Gutenberg. Retrieved from http://www.gutenberg.org/files/11/11-h/11-h.htm

Gee, J. P. (2003). What video games have to teach us about learning and literacy. New York, NY: Palgrave Macmillan.

Herreid, C. F., & Schiller, N. A. (2013). Case studies and the flipped classroom. Journal of College Science Teaching, 42(5), 62-66.

Shepard, L. (2000). The role of assessment in a learning culture. Educational Researcher, 29(7), 4-14.

Shepard, L. (2005). Linking formative assessment to scaffolding. Educational Leadership, 63(3), 66-70.

Van den Bergh, L., Ros, A., & Beijaard, D. (2013). Teacher feedback during active learning: Current practices in primary schools. British Journal of Educational Psychology, 83, 341-362. doi:10.1111/j.2044-8279.2012.02073.x

This summer, I have been taking a long hard look at assessment. While as students (and now teachers) we tend to view assessments as a “necessary evil”, this summer has changed my thinking on this. You can read more about the evolution of my views on assessment in this post. Throughout my reexamination of assessment, I have been thinking a lot about one that I have given hundreds of times: a reading benchmark assessment. The purpose of this assessment is to give teachers a baseline reading level for incoming students so that they can assign appropriate books, form reading groups based on ability, and identify students who may be in need of intervention services.

While this data is incredibly useful, the current method for obtaining it is very labor intensive, relying on one-to-one oral reading assessments. This means that a classroom teacher or, in my case, an intervention teacher has to sit and keep a running tab of what the student is reading. This process can take 15-20 minutes per student. For more on these types of reading assessments, read my earlier post.

In addition to the high time costs of such assessments, there is even some question as to whether reading aloud is an effective measure of reading skill. Specifically, this type of assessment, with its emphasis on oral fluency, seriously disadvantages non-native English speakers and students with speech challenges. Additionally, the standardized reading tests that students (and teachers and schools) are evaluated with do not include read-aloud questions. Not to mention that the current benchmark assessment does not even attempt to draw on students’ interests, environment, and intrinsic motivation. I set out to create a set of assessments that could effectively and efficiently give teachers a student’s reading level, and could also give the student an opportunity to share their feelings about reading with their teacher.

The assessment that I came up with has two major sections: the Reading Lab and the How I Feel about Reading assessment.

The two sections are separated and need not be given at the same time.

The Reading Lab is an application that can run on Mac, Windows, or Linux operating systems.

It features two types of reading assessment: word decoding and a maze reader comprehension test.

When the application opens, students sign in and select an avatar (icon).

These two elements are put together to form an ID badge that stays at the top of the screen throughout the assessment.

I thought this was an important feature to include since it would allow students to personalize their assessment and really feel like it was just for them.

The decoding section is designed to identify the student’s instructional reading level. This is the level at which the student can read and comprehend text with help. To find the instructional level, students are taken through a progressive series of reading levels. The student continues to advance until they score lower than 90% on a given level.

The decoding questions can be presented in three different ways:

Stem Audio – A word is read to the user and the user must choose the correct word from four options. Note: in the example, the spelling ‘twurl’ is incorrect but is phonically reasonable; as a result, a user who chooses this option will receive partial credit.

Option Audio – A word is printed at the top of the screen. The user then listens to four audio options and clicks the one that matches the printed word.

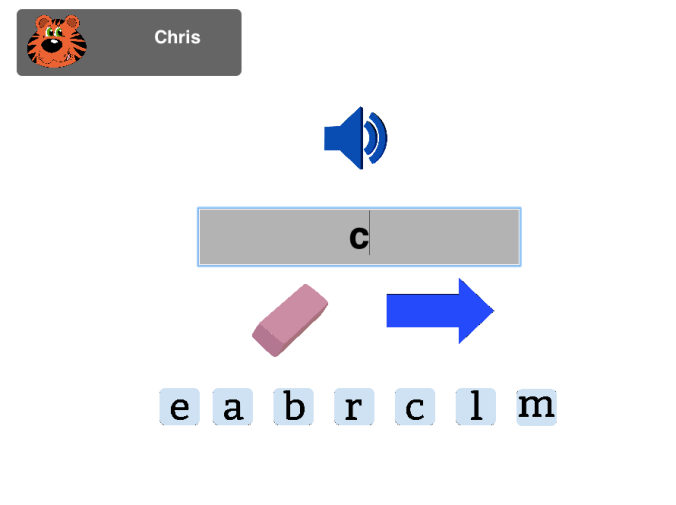

Stem Audio with Spelling – A word is presented aurally for the user. The user then uses letter tiles to spell the word in a field that is provided.

The decoding section features twelve words per reading level. If the user scores 90% or better on the twelve words, they are passed up to the next level. So if a user was working in level E and passed with a 95.8%, they would be moved to level F. If the score is lower than 90%, their score is recorded. This score and the reading level will be shared with the teacher to indicate the student’s instructional reading level. The student then proceeds to the maze reader comprehension section.
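The advancement rule described above can be sketched in a few lines of Python. This is only an illustration, not the Reading Lab’s actual code: the function names, the way answers are recorded, and the half-credit value for a phonically reasonable answer are all assumptions made for the example (the post only says “partial credit”).

```python
# Hypothetical sketch of the decoding progression; not the Reading Lab's real code.
PASS_THRESHOLD = 90.0  # from the post: 90% or better moves the student up
PARTIAL_CREDIT = 0.5   # assumed value; the post only says "partial credit"


def level_score(answers):
    """Return the percentage score for one level.

    `answers` is a list of "correct", "partial", or "wrong" strings,
    one per word (twelve per level in the post).
    """
    points = {"correct": 1.0, "partial": PARTIAL_CREDIT, "wrong": 0.0}
    return 100.0 * sum(points[a] for a in answers) / len(answers)


def find_instructional_level(levels, answers_by_level):
    """Walk up the levels until a score falls below the threshold.

    Returns (highest_passed, highest_attempted, score_at_attempted).
    """
    highest_passed = None
    attempted, score = None, None
    for level in levels:
        attempted = level
        score = level_score(answers_by_level[level])
        if score < PASS_THRESHOLD:
            break  # this level is recorded as the instructional level
        highest_passed = level
    return highest_passed, attempted, score
```

Under the assumed half credit, eleven correct answers and one partial answer give 11.5 out of 12, or about 95.8%, which happens to match the level E example above.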

A maze reader comprehension assessment consists of a passage of text in which one in seven words is left blank and students must choose from three potential options to complete the text. The assumption is that “capable readers understand the syntax of what they read and the meanings of the words as they are used in the text. Some students with reading difficulties can’t comprehend what they read well enough to choose words based on semantic and syntactic accuracy” (Milone, 2008, p. 150). In this way, the maze reader can give the teacher a clear indication that the student is in fact able to read-to-self for comprehension at the given level. This is an important point, since often students can decode words at a higher level than they can effectively comprehend text. Here is how the maze reader comprehension assessment appears in The Reading Lab.

An introductory example is provided with audio instructions. The user is introduced to any characters with proper nouns that will be in the passage (in this case Mia).

They are then given a practice example where they are asked to complete the passage “Hi. My [name] is <the given user’s name>.” By using the user’s name, a more personalized experience is created.

Once the user has completed the introductory example, they then begin the assessment.

The passage runs 150-200 words depending on the level. After the first sentence, there is a word to fill in approximately every seven words.
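To make the layout of a maze passage concrete, here is a minimal Python sketch of how the blanks could be placed. It is a sketch under stated assumptions, not how the Reading Lab builds its passages: the function name `make_maze`, the idea that the two wrong options come from a hand-written `distractors` dictionary, and the simple way the first sentence is detected (splitting at the first period followed by a space) are all hypothetical.

```python
def make_maze(passage, distractors, gap=7):
    """Blank out every `gap`-th word after the first sentence.

    `distractors` maps a blanked word to its two wrong options (assumed
    to be written by hand). Returns (display_words, items), where each
    item is (position_in_display, correct_word, options).
    """
    # Leave the first sentence intact (simplified sentence detection).
    first, sep, rest = passage.partition(". ")
    if not sep:
        return passage.split(), []

    display = (first + ".").split()
    items = []
    for i, word in enumerate(rest.split(), start=1):
        core = word.strip(".,!?\"'")  # keep punctuation outside the blank
        if i % gap == 0 and core:
            # Sort so the correct answer is not always listed first.
            options = sorted([core] + list(distractors.get(core, [])))
            items.append((len(display), core, options))
            display.append(word.replace(core, "____", 1))
        else:
            display.append(word)
    return display, items
```

For example, `make_maze("Mia has a dog. The dog likes to run in the park every day after school.", {"the": ["an", "of"]})` leaves the first sentence alone and blanks the seventh word that follows it (“the”), offering “an”, “of”, and “the” as the three options.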

Upon completion, the user’s score is calculated. If the user scores 95% or better, it is safe to assume that this is a suitable read-to-self level. The level of maze reader that the student is assessed with is taken from the user’s decoding level. In the example of the user who passed the level E assessment, but only scored an 85.8% on the level F assessment, the user would be given the level E maze reader in order to confirm that they had sufficient comprehension at their read-to-self level.

The final screen of the application shows students and teachers the student’s results. This screen can be password protected so that a password must be entered in order to see the results. This is done so that the teacher can discuss the results with the student; it should not be used to conceal the results from the student. It is important that students be recruited into the process of developing their reading, and I firmly believe that giving students clear feedback on their performance is an essential part of fostering student autonomy and intrinsic motivation.

Results are presented as the highest decoding level passed, highest decoding level attempted, percentage correct at highest level attempted, maze reader attempted, and percentage correct on maze reader. The highest level attempted in decoding represents the student’s instructional level. This can be used for the student’s individual goal setting and to inform the teacher of the level of reading skills that are reasonable to expect from this student at this time. The maze reader data can be used to help students select books that are at a level which the student can comprehend. This information can also be used by the teacher for assigning reading materials and grouping students of like ability into reading groups. While the Reading Lab assessment is not robust enough to be used as a comprehensive form of intervention assessment, it serves as a useful indicator of students who may need additional diagnostic and intervention services.
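The five reported values, and how they map onto the instructional and read-to-self levels, can be sketched as a small Python record. This is an illustration only: the class name, field names, and summary wording are hypothetical, and the 96% maze score in the usage note is made up for the example.

```python
from dataclasses import dataclass
from typing import Optional

READ_TO_SELF_THRESHOLD = 95.0  # from the post: 95% or better on the maze reader


@dataclass
class ReadingLabResult:
    """The five values the post lists on the results screen (names assumed)."""
    highest_decoding_passed: Optional[str]
    highest_decoding_attempted: str
    decoding_percent_at_attempted: float
    maze_level_attempted: str
    maze_percent: float

    @property
    def instructional_level(self) -> str:
        # The post treats the highest level attempted as the instructional level.
        return self.highest_decoding_attempted

    @property
    def read_to_self_confirmed(self) -> bool:
        # True when the maze score supports independent reading at that level.
        return self.maze_percent >= READ_TO_SELF_THRESHOLD

    def summary(self) -> str:
        status = "confirmed" if self.read_to_self_confirmed else "not yet confirmed"
        return (
            f"Instructional level: {self.instructional_level} "
            f"({self.decoding_percent_at_attempted:.1f}% decoding). "
            f"Read-to-self level {self.maze_level_attempted}: {status} "
            f"({self.maze_percent:.1f}% on the maze reader)."
        )
```

For the student in the earlier example (passed level E, scored 85.8% on level F) with a hypothetical 96% on the level E maze reader, `ReadingLabResult("E", "F", 85.8, "E", 96.0).summary()` would report an instructional level of F and a confirmed read-to-self level of E.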

The feedback provided easily answers the three questions as articulated by Hattie & Timperley (2007):

Where am I going? The assessment gives the instructional level as well as the read-to-self level.

How am I going? How far is the student from having their instructional level be their read-to-self level?

Where to next? By going over this data with the teacher, the teacher is able to recommend a regimen of just-right books that will push the reader to the next level.

While the goal of the first prong of the assessment was to help students get reading material that was at the right level for them, the goal of the second prong is to get to know about them as readers and get them excited about reading in the upcoming school year.

For this assessment, the student works in a Google Slides presentation that they share with their teacher. Instructions on how to copy the assessment and work in it are provided in this YouTube video.

By using a Google slideshow, students have a fun and interactive way to create their own reading profile and share it with their families, friends, and teachers. Also, this file can be saved and updated throughout the year and serve as a reflective journal of their habits and attitudes toward reading.

The assessment asks students to think about and articulate the following:

In addition to getting valuable information on the types of books that could hook the student, the teacher gets some exposure to the reading community that is around the student. This type of information can be very valuable for the teacher. Is there a family member that the student likes sharing books with? Does the student have negative associations towards reading? Does the student have habits that will support their growth in reading? All of this information can be very useful in crafting a pro-reading classroom that promotes student autonomy and accountability.

While the information gathered will be useful to the teacher in crafting future instruction, the assessment also helps set the stage for the student. It begins to orient them towards important ideas around reading, for example, confronting challenging words, self-evaluating comprehension, and thinking about the social and emotional aspects of their lives as readers. In this way, the assessment addresses what James Paul Gee in his book What Video Games Have to Teach us about Learning and Literacy (2003) terms the external grammar of reading. While the reading lab assessment looks at the internal grammar of reading (how well the student performs the acts of decoding and comprehension), the How I Feel about Reading assessment considers the external grammar of reading, how readers think and live and how the students can construct their own lives as readers.

Upon completion of the two prongs of this assessment the teacher and student will have a clear idea of the following:

In this way, the assessment provides far more information than a benchmark assessment, using decidedly fewer hours of the teacher’s time. The assessment allows the teacher to collect data essential for helping the students’ reading growth in a way that is not overly taxing for the student or the teacher. Additionally, the personalization of the assessment allows students to play an active role in their assessment as readers, promoting autonomy and accountability.

When evaluating this assessment, I compared it to Nicol & Macfarlane-Dick’s (2006) seven principles of good feedback (p. 205):

Clarifies what good performance is (goals, criteria, expected standards): This is achieved through the Reading Lab. It gives clear performance indicators in terms of decoding and comprehension.

Facilitates the development of self-assessment (reflection) in learning: This is achieved through the How I Feel about Reading assessment. Students are encouraged to reflect on their own feelings and habits as readers and are required to articulate their own personal reading goals.

Delivers high quality information to students about their learning: The assessment is founded on sound principles for assessing decoding, comprehension, and students’ background in reading.

Encourages teacher and peer dialogue around learning: The ability to share and present the Google slides provides many opportunities for dialog between teachers, students, and families. The data from the Reading Lab can be used to help teachers create ability-based peer groups that ensure students are working to the best of their ability in groups of students who are confronting the same challenges.

Encourages positive motivational beliefs and self-esteem: The assessments are fun, non-judgemental, and personalized. They should make students feel comfortable.

Provides opportunities to close the gap between the learning goal and the students’ current performance: The flexibility built into the Reading Lab means that students can grow with the assessment over time. The saved Google Slides allow students to revisit and revise their thinking about assessment.

Provides information to teachers that can be used to shape teaching: The information that teachers get from these assessments can be used by teachers to:

The two videos below will give you an overview of the two assessments. The first video is a virtual tour of the Reading Lab and the second is the introduction to the How I Feel About Reading Assessment.

If you would like to try out the assessments, links are provided below.

GET THE APP

Note: the Reading Lab is a download application that is compatible with Mac, Windows, and Linux. Feel free to download the appropriate file.

Resources

Gee, J. P. (2003). What video games have to teach us about learning and literacy. New York, NY: Palgrave Macmillan.

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112.

Milone, M. (2008). Core Reading Maze Comprehension Test. Academic Therapy Publications. Retrieved from https://www.nsbsd.org/cms/lib01/AK01001879/Centricity/Domain/41/CORE%20MAZE.pdf

Nicol, D., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218.

The images used in the application are cited in the resources link in the application. You can view it here.

This week, I was asked to review one of my colleagues’ Twine assessments. Having made my own Twine assessment last week, I am familiar with the constraints and affordances of Twine. I was interested to review Peter Kim’s Game-Based Assessment: Super Berry Hunt.

The semiotic domain of the assessment was zoology, specifically the differences between predators and prey. To survive one needs to avoid predators and ask various non-predators (prey) how to find the Super Berries. The game tests students’ abilities to differentiate between predators and prey (internal grammar). It also demands that they show perseverance and navigate the numerous twists and turns of the game (external grammar).

I found the game challenging; I went through a few times and it took me a minute to remember that the orange berries were the ones that wouldn’t kill me.

Here is how the game compares to my Assessment Design Checklist:

Does this assessment explicitly address the most relevant skills and big ideas in the topic of the assessment and the discipline being taught in the course?

I think that it does. It is my understanding that the goal of the game is to think about predators and prey. Throughout the game, I need to identify whether an animal that I encounter is predator or prey. The one thing that I think could be improved here is some mention of the salient traits that indicate a predator. A simple statement like “the shark smiles and you see its giant teeth. You realize that you are looking at a flesh-tearing, meat-eating predator!” could help this assessment do a little more in terms of the primary objective.

I also feel that while I did need to identify whether an animal was predator or prey, the way I gathered information from the animals on where the berries were and which berries were edible was not particularly tied to any learning goal. It would be great if the game could more closely tie what proved to be its biggest challenge to the outcome objective.

Does this assessment provide the teacher with actionable information about the student’s background and expectations for the topic?

The assessment has feedback built into it (if you get something wrong you are redirected and are able to try to correct the mistake). I wonder if there is a way to get more information on how the student is performing and also to get some information from them on their knowledge and misconceptions.

Does this assessment prime the student for learning within the discipline and promote student autonomy and accountability?

I really did get the sense that it is my responsibility (as a student) to learn from the game. I also felt like I had a great deal of freedom. I do like that the game let students choose their animal, but I was a little disappointed that it didn’t reference it later. It would be a nice touch to store the student’s selection as a variable and refer to it from time to time.

Does this assessment provide the student with clear feedback that is informative and actionable?

This assessment definitely did provide clear feedback that was informative and actionable. I particularly liked the option to go to the safe house and start over. I also liked the YouTube video on predator or prey that I got when I picked “promise” in the beginning. I feel there could be more of this kind of teaching in the assessment.

Does this assessment address the primary skills being assessed and take into account the various learning styles, abilities, and disabilities of the students being assessed?

For the record, I think that this is challenging in Twine. I will say that including images as well as the aforementioned video could be very helpful for ELL students. Since the author specifically mentions ESL students in the blog, I will mention that adding some more audio could be quite helpful.

I did not find any noticeable glitches, with one exception: the links for the left and right paths at the lion seemed mixed up.

One thing I found particularly interesting in the game was the way in which within the game animals are able to talk, but in the broader context of science, they are not able to speak using human language. While this could be seen as a constraint of gamified assessment, I actually see it as a wonderful affordance…a whimsical way to make a specific topic in the real world more engaging while not being confined by reality.

As discussed in my last post, I am creating a gamified assessment in Twine. The semiotic domain I focused on is division of fractions. I chose this domain because it is a challenging topic to teach. What is particularly interesting is the interplay between the internal and external grammar of the domain. While math educators are pushing to include more understanding in the instruction of mathematics, dividing by fractions is a particularly difficult topic to understand. In my experience, most teachers abandon trying to really explain why students should use the algorithm of multiplying by the inverse to find the sections within what is being divided, and opt instead for a rote memorization of Keep, Change, Flip. This rote memorization works, but it does not promote understanding. So the algorithm satisfies the internal grammar (the procedure used to get the right answer) but leaves students with little understanding of actual mathematics (the underlying reasoning of the procedure).

I designed my Twine to be a formative assessment as learning. The premise of the game is that the player has been trapped in a locked room by the Great Divisor. There is only one door, and it is sealed with three locks. In order to escape, the player has to find three different colored keys. The three keys are inside of boxes that will only open if the player solves the problems that are on the boxes. The three keys represent the three foundational steps in learning to divide fractions:

Each box has a minimum of four problems that get progressively harder. Fortunately, the player is not alone; they have their trusted mouse, Denny, who is very good at math and can communicate telepathically. Unfortunately, he cannot tell the player the answers, but if the player misses a problem, Denny can offer mini-lessons on how to solve that type of problem. The player will then be prompted to do a few practice problems before going on to try to solve the box problems again.

The player may try the door at any time, but if they do not have the keys, it will not open. Additionally, the player may go through the boxes in any order, but the blue lock must be unlocked first. I did this so that players could have a sense of freedom but still be led down a linear path.

On most questions, players have the option of asking Denny to explain one or more concepts. (At one point he even convinces the player to not eat potentially poisoned food!) And if the player misses a question, Denny is there to offer some advice. After a few practice problems, the player is back to trying to solve the box problems. This practice was inspired by something I read from James Paul Gee (2008): “The game encourages him to think of himself as an active problem solver, one who persists in trying to solve problems even after making mistakes, one who, in fact, does not see mistakes as errors but as opportunities for reflection and learning” (p. 36).

I tried to balance the internal and external grammar issue by first having the blue box section where students are tested on the key terms for the unit. This will ensure that they will be able to process later instruction at a deeper level. Additionally, the mini-lessons always begin with an explanation of the why (external grammar) before we get into the process of how to solve the problem (internal grammar). This is an important point, as we want to teach for understanding. As an example, I have linked a video from the Twine where Denny explains how to deal with whole numbers when dividing fractions. While there is a temptation to say, “just put it over one” (procedure), for most students this seems totally arbitrary. In the video, Denny begins by demonstrating why 3 is the same as 3/1 (the underlying reasoning) and then goes on to review how to divide 3 by ⅖ (procedure).
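The same 3 ÷ ⅖ example can be worked through in a few lines of Python. This is only an illustrative sketch and is not part of the Twine game; the function names `keep_change_flip` and `count_pieces` are made up for the example. It shows that the Keep, Change, Flip procedure and the "how many ⅖-sized pieces fit in 3?" reading give the same quotient.

```python
from fractions import Fraction


def keep_change_flip(dividend, divisor):
    """Keep the dividend, change division to multiplication, flip the divisor."""
    dividend = Fraction(dividend)
    divisor = Fraction(divisor)
    # Flipping swaps the numerator and denominator of the divisor.
    flipped = Fraction(divisor.denominator, divisor.numerator)
    return dividend * flipped


def count_pieces(dividend, divisor):
    """Ask directly: how many divisor-sized pieces fit in the dividend?"""
    return Fraction(dividend) / Fraction(divisor)


# A whole number is the same as that number over one.
assert Fraction(3) == Fraction(3, 1)

by_procedure = keep_change_flip(3, Fraction(2, 5))
by_meaning = count_pieces(3, Fraction(2, 5))
print(by_procedure, by_meaning)  # 15/2 15/2
```

The quotient 15/2 is larger than the dividend 3 because each piece is smaller than one whole, so more than three of them fit.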

Earlier this summer, I made an assessment design checklist. Here is how I think my gamified assessment measures up against the list.

I do think that this assessment meets this standard. The big ideas in this topic are knowing the terms for parts of a fraction, understanding how to multiply fractions, and understanding that dividing fractions is the same as multiplying the dividend fraction by the inverse of the divisor. While much of this is expressed in terms of internal grammar–with the amorphous word “understanding” standing in for the external grammar–I do feel that the game makes a good attempt at teaching the underlying principles.

On this count, I feel Twine falls a little short. The teacher can gather a lot of information from walking the room, but there does not appear to be any collection of data. There is not an easy way to build in recorded student feedback. I linked a survey at the end, in hopes of gathering some of this data, as well as collecting bugs.

There are a few features added to the story to allow the player a more customized experience, including the use of their name throughout the game as well as a reference to their favorite food.

I do think that it does prime students for learning. Not only does it cover the “must-have” concepts for dividing fractions, it has them work through some problems with a fair amount of guidance in a fun way.

This is what I really like about Twine. You can direct students through the experience based on their responses. There were a few times where one answer option was an un-simplified fraction. While that answer is technically right, the structure of Twine allows me to refer students back to the question to simplify the answer. It also allows me to set up common mistakes as distractors and address them directly.

This is another point where the game might be a little limited by Twine. Since it runs in internet browsers, we can assume that users with disabilities will have at their disposal the tools that they are used to using for web browsing. But beyond this, accessibility is an issue with the presentation. Also, the text-driven nature of this assignment means that it will present a challenge to ELL players.
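The three-way routing described here (wrong, right but un-simplified, right) can be sketched in Python. This is a hypothetical illustration rather than how the Twine story is actually built; the function name `grade` and the assumption that answers are written as text in the form "a/b" are mine.

```python
from fractions import Fraction
from math import gcd


def grade(answer_text, expected):
    """Classify an answer written as 'a/b' against the expected Fraction."""
    numerator, denominator = (int(part) for part in answer_text.split("/"))
    if Fraction(numerator, denominator) != expected:
        return "incorrect"   # route to a mini-lesson
    if gcd(numerator, denominator) != 1:
        return "simplify"    # right value, send back to simplify
    return "correct"         # move on to the next problem


expected = Fraction(15, 2)
print(grade("30/4", expected))  # simplify
print(grade("15/2", expected))  # correct
print(grade("6/5", expected))   # incorrect
```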

It has been a fun experience to build an assessment in Twine. There are a number of features that make it useful for creating unique learning experiences. One thing that I really liked was the ability to use CSS to style the presentation, although I feel like I could have done a lot more to make an eye-popping presentation. The ability to embed content with HTML and the ability to use variables and conditional selection to create game situations allowed for an interesting game. In the case of my game, a variable is assigned to each key. When the player goes to the door, they are asked to try each key. If the variable for that color key is true, the door opens; if not, they are told to keep searching the room. This feature allows the creator to make more engaging user experiences. I am really intrigued by how to make the game more personal, as well as by finding other ways to track user performance throughout the game.

Try the game yourself and let me know what you think!
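The key-and-door logic can be sketched in Python as a set of true/false variables checked at the door. This is an illustration of the idea only, not the Twine source; the function name `try_door` and the red and green key colors are assumptions (only the blue key is named in this post).

```python
def try_door(keys):
    """Return the door message for a dict mapping key color to a found flag."""
    missing = [color for color, found in keys.items() if not found]
    if missing:
        return "The door stays shut. Keep searching the room."
    return "All three locks click open. You escape!"


# Every key variable starts out false and is set to true when its box is solved.
keys = {"blue": False, "red": False, "green": False}
print(try_door(keys))

keys["blue"] = True
keys["red"] = True
keys["green"] = True
print(try_door(keys))
```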

Please note: the game is hosted on a different server than this post.

Gee, J. P. (2008). What video games have to teach us about learning and literacy. Basingstoke: Palgrave Macmillan.

You are a prisoner in the dungeon of an evil genius.

Do you have the intelligence, the strength, and the perseverance to escape?

The challenge has been issued, will you meet the call?

Yes… Yes, I will

For our next assessment we have been asked to create an assessment using Twine.

I am excited about this for a few reasons.

First, I love the idea of gamified assessment. In fact, just last night I was having a conversation with my school-aged kids, and they told me school would be much better if “our tests and homework was more like Assassin’s Creed”. It has been my experience that the same kids who appear to have little grit when it comes to learning a math concept can also be the ones who will stay up all night trying to slay a boss in their favorite game. It all reminded me of this video with James Paul Gee.

Second, I am very interested in working with Twine. The interface for the teacher seems very logical, and I like that you can use HTML to customize it. While Twine will demand a fairly linear narrative and it appears that the questions always break down to some sort of objective choice, I still think that I can find some interesting ways to lead a student through a learning/assessment experience.

For my assessment, I am focusing on middle-school math. Specifically, I am focusing on the skill of dividing fractions. One reason I find this an interesting study is because of the interaction between the internal and external grammar of the topic. On a conceptual level, dividing by fractions can be a bit opaque. While it is easy to teach students to conceive of dividing with whole numbers by drawing circles and counting up tally marks (one for you, one for me, when you divide by two) what does it mean to divide something by less than one? Why is the quotient bigger than the dividend?

Often, students are taught workarounds. For example, the ever-popular KCF, or Keep, Change, Flip (sometimes also taught as KFC, like the yummy chicken place). This method has become so popular that there are even songs dedicated to it, like the one below:

While it is an effective algorithm, there is something that has always bothered me about KCF (KFC), and that is that kids apply it without really understanding why. It seems to me to reinforce the idea that math (especially math after 3rd grade) is a collection of secret handshakes and complex procedures that don’t relate to reality. So while KCF satisfies the internal grammar of mathematics (it gives you the right answers) it doesn’t help kids actually learn how to do mathematics (external grammar). Likewise, it reinforces a common attitude amongst the peer group that views math as esoteric and unrelatable.

My plan is to make an escape room:

The Grand Divisor has locked you in his study.

In order to escape, you must decipher his devious puzzles and enter the 8-digit code that unlocks the room.

You will explore the room and be given questions along the way; the answers to these questions make up the digits of the code.

When you successfully enter the 8-digit code, you will escape.

The Grand Divisor did not realize that your super-intelligent rat, Denny, is with you. If you get stuck or make a mistake, he will use his magical-mathematical telepathy to teach you what you need to know.

While Denny has incredible mathematical skill, he will not give you answers.

Your problems are yours alone; only you can solve them.

This feature of the game will allow me to cycle students through mini-lessons if there is an idea that they do not understand. If a student misses a question, Twine can be used to branch them into an instructional track before looping them back to the problem to allow them to try it again.
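The miss, mini-lesson, retry loop can be sketched in Python. This is a rough model of the planned branching and not actual Twine code; the function name `run_question` and the passage labels are hypothetical.

```python
def run_question(correct_answer, attempts, mini_lesson):
    """Trace the passages a student visits: each miss detours through the mini-lesson."""
    path = []
    for answer in attempts:
        path.append("question")
        if answer == correct_answer:
            path.append("next passage")
            return path
        # A miss branches into the instructional track, then loops back.
        path.append(mini_lesson)
    return path


print(run_question("15/2", ["6/5", "15/2"], "lesson: why we flip"))
# ['question', 'lesson: why we flip', 'question', 'next passage']
```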

While Denny will teach KCF to help students solve a problem, some of the questions and mini-lessons will focus on the underlying principles of fractions and dividing them, so that students will have a deeper understanding of why we KCF.

This assessment aligns well with my assessment design checklist, but there are a couple of areas that I am concerned with. The assessment is fairly cut-and-dried in terms of the content. The answers always end up being the selection of one “right” answer. While this is ok, I would prefer something that is a little more flexible. I read that variables can be used in Twine, but I need to explore more how they could be used to create a more personalized experience. Also, I do not know what sort of accessibility features Twine has, so this could create some problems.

I am really looking forward to building this assessment and getting to know Twine!

DMLResearchHub. (2011, August 4). Games and Education Scholar James Paul Gee on Video Games, Learning, and Literacy. [YouTube video]. Retrieved from https://www.youtube.com/watch?v=LNfPdaKYOPI&t=2s

Mobile Learning Center. (2015, November 25). Watch Me Flip Dividing Fractions Song. [YouTube video]. Retrieved from https://www.youtube.com/watch?v=8Tv7WunDsLg

This week, I experimented with creating an assessment (actually, assessments) in Google Classroom. As discussed last week, I was interested in exploring how Google’s free Learning Management System (LMS) compared to some of the more costly LMSs like Blackboard. I was particularly interested in how Google Classroom could be used to create assessments and how easily teachers could get rich data on their students’ performance on an assessment.

Google Classroom content can be linked directly from your Google Drive. So if a teacher is using Google Docs for anything in their teaching, they can upload the content right to their classroom. This can really streamline the preparation process for teachers. Additionally, since the files are linked through Google Drive, they are updated immediately, meaning that any changes the teacher makes to documents on their side will automatically update on the student side.

Assessments can be created with a variety of question types (multiple choice, short answer, essay, etc.). Different point values can be assigned to different questions, and results can be released to the student immediately or held by the instructor. The teacher is provided with very rich data on the quiz, including which questions students missed and, where applicable, which answer the students gave.

One feature that I found interesting was the section feature. This allows you to create different sections in the assessment. Each section is presented in its own window. You can then direct the student through the test based on their answers. So, for example, in the assessment on 3-digit subtraction, if a student is unable to select the correct vertical arrangement of a problem presented horizontally, there is little benefit in having them try to solve the problem, and the assessment can move on to the next big concept. This allows teachers to create somewhat adaptive assessments that can stretch their higher-performing students without stressing their lower-performing students.
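The section routing in the 3-digit subtraction example can be modeled as a small lookup table in Python. This is a sketch of the idea only and does not use any Google API; the section names and the `next_section` function are hypothetical.

```python
# Each section maps "answered correctly?" to the section shown next.
ROUTES = {
    "vertical_arrangement": {True: "solve_problem", False: "next_concept"},
    "solve_problem": {True: "next_concept", False: "next_concept"},
}


def next_section(section, answered_correctly):
    """Return the next section, or 'submit' when no route is defined."""
    return ROUTES.get(section, {}).get(answered_correctly, "submit")


# A student who cannot pick the correct vertical arrangement skips the solving step.
print(next_section("vertical_arrangement", False))  # next_concept
print(next_section("vertical_arrangement", True))   # solve_problem
```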

Another interesting feature was the feedback. While I was disappointed to see that the teacher cannot provide unique feedback for each distractor, Google Classroom provides the opportunity for teachers to embed images, documents, and YouTube videos into the failure feedback. This is a very powerful tool that will allow for individualized instruction, as I demonstrate in the screencast below.

One of the things that I like about using Google tools in the classroom is that Google applications have a number of accessibility features, and service providers and students tend to be aware of them. For example, Chromebooks have a quality, easy-to-use text-to-speech feature. Additionally, a plug-in like Texthelp Read&Write is compatible with documents created in Google.

I created two types of assessment for the exploration, both of them involving 3-digit subtraction. To start, I thought through the different skills needed to successfully perform 3-digit subtraction.

In creating the distractors, I purposely included common errors that will give the teacher information on students’ understanding and gaps in understanding. These include:

The two assessments are as follows:

This is an assessment for learning that can be given before the unit is taught. It presents questions that address the key concepts and misconceptions in the unit. It is presented in an adaptive way, so that students who have little pre-knowledge will be able to quickly move through the assessment without being demoralized. The assessment ends with a question that allows students to discuss a time when they use subtraction in their daily lives. The teacher can use students’ responses to this quick quiz to evaluate where students are and decide how to tailor their instruction for the upcoming unit. It also gives students a sense of the skills needed to successfully subtract 3-digit numbers and encourages them to make personal connections with the material that will be covered in the unit.

This is an assessment as learning. In this short assessment on place value, I tried to develop a module that could be used in a hybrid or flipped-classroom scenario. The assessment begins with a video reviewing place value. Students check that they have watched the video and then are given a variety of questions dealing with issues specific to identifying place value. Upon submission, the quiz is immediately graded. Students are able to see what they got right and wrong. Additionally, the teacher can link additional material or videos to specific questions so that students can target the concept that they missed. In the case of this example, I made a quick video called “dealing with zero” that appears in the feedback section if the student misses the question with a 0.

In a previous post, I presented a formative assessment checklist. I went ahead and evaluated this mini unit of 3-digit subtraction against the criteria of that list. Here is what I found:

Does this assessment explicitly address the most relevant skills and big ideas in the topic of the assessment and the discipline being taught in the course?

Yes, I purposely included questions which would test the key threshold points in understanding the concepts: place value, vertical arrangement, regrouping, etc. and included distractors that consisted of the most commonly made errors.

Does this assessment provide the teacher with actionable information about the student’s background and expectations for the topic?

Yes. The pre-screening gives the teacher a great deal of information on where the students currently are on the topic, and both assessments give the teacher rich data on where students are, what they are getting, and what needs work. They also give the instructor some interesting avenues into exploring math in students’ everyday lives.

Does this assessment prime the student for learning within the discipline and promote student autonomy and accountability?

This is one area that can be improved. I could see these assessments being part of a larger learning path that students embark on. By mastering a topic, they progress farther along the path.

Does this assessment provide the student with clear feedback that is informative and actionable?

I think this is certainly true of the second assessment. I really like having the feedback presented to the student and being able to link relevant documents and videos to the feedback.

Does this assessment address the primary skills being assessed and take into account the various learning styles, abilities, and disabilities of the students being assessed?

Yes, given the many accessibility tools that Google provides, this should serve students needing accommodations. The one thing I could see improving in this area is getting more into students’ individual, creative sides. This is why I think it would be great to pair this type of module with in-class problem-solving activities.

I was very pleased with the flexibility and ease of use of Google Classroom. It is not the most attractive user experience, and currently there are few options available to customize the theme colors, etc. But the tools that teachers have in Google Classroom are quite powerful, and they sync well with other Google tools like Google Docs and YouTube. While large institutions are able to pay for robust LMSs like Blackboard, Canvas, and D2L, Google Classroom appears to provide teachers at more modest institutions a very powerful LMS that is free and relatively easy to use.

For a quick run-down of the assessment I created and my thoughts on Google Classroom, check out the video below.

As we begin to examine Learning Management Systems (LMSs), I decided to start with the one I know best, Blackboard. I first met Blackboard as an undergrad at the University of New Orleans. It was the first LMS I had ever worked with. Just for reference, this was pre-smartphone, and at the time I had a Hotmail account. Technology being what it was at the time, Blackboard was clunky, buggy, and did not have a rich user experience (UX). For my first round of graduate school, I went to the New England Conservatory of Music, and they really didn’t implement any sort of LMS. So imagine my surprise when I got my first adjunct teaching job and the university I was working at was using Blackboard: pretty much the same clunky, buggy, dull-UX Blackboard of my younger days.

Why was this still such a popular LMS when there are so many great, specialized online tools available now? Because using a variety of tools may mean that “learners are likely to be overwhelmed by the many types of interface that they have to handle, and the learning experience will likely be frustrating” (Woo, 2013, p. 37). Blackboard puts a number of tools for students and instructors all in one place. Among other things, Blackboard can integrate email, support a wide variety of content types (assignments, assessments, discussions, wikis, etc.), sync with DropBox for easy assignment turn-in, and provide a teacher grade book that syncs with assignments and assessments and can group and weight assignments according to the instructor’s needs.

Here are the things that I have observed about Blackboard as I have used it:

After working with Blackboard for a few semesters, I found that the different tools in Blackboard were quite useful after some experiments and reiterations. Multiple choice assessments worked fine, but I did find the sheer amount of time it took to create them frustrating. There is also the issue of academic integrity. If you intend to have a “closed-book” test, you will need to include the provision for a lockdown browser and a webcam to monitor the test. Some students find this intrusive. This being the case, I found that in in-person and hybrid class situations where summative multiple choice tests were appropriate, scantrons were simply more efficient. Where multiple choice tests in Blackboard were very useful was in assessment-as-learning situations. Automated quizzes in Blackboard can be given with multiple attempts allowed. The instructor can also set a cap on the number of attempts that the student can take. In this way, the instructor can give an assessment that tests or pushes student knowledge in a certain area and includes immediate feedback on what they got wrong. Then the student retries the exam. If they reach the maximum number of attempts, the quiz can be reopened after a meeting or correspondence with the instructor.

Surveys can be very useful tools in Blackboard. They can help an instructor gauge student understanding or preconceptions on a concept. Results of the survey are presented in an easy-to-interpret way. I found this very helpful to use in formative assessment. For example, if I wanted to know how well students understood our musical terms, I could put up a survey and see that 80% of the class correctly identified an example of polyphony, while 50% were still unsure of the difference between timbre and texture. I could also get candid feedback on their preconceived notions of particular types of music and cultures. Additionally, I could ask students to write a short essay where they discuss an example from class and then highlight the students’ responses. Best of all, all of this is done with anonymity.

The discussion portion of Blackboard can be useful for discussions, but there were a few things that made it difficult. In addition to the perfunctory feeling that comes from these types of discussions, it was not easy to assign credit for participation, and the system of threads and subthreads left a lot of students lost and confused.

While there were useful tools in Blackboard, I did often find myself using outside tools. For example, shared Google Docs were just too useful to pass up: students could collaborate in a document in real time, with the option to add comments as they worked. Also, after a few attempts to have students do writing assignments in Blackboard, I abandoned the concept in favor of using WordPress. Having an aggregated WordPress blog for the class allowed us to see each other’s work. I could use tags to create mini collections of different content that students could peruse to learn more about what they were interested in or stuck on. Finally, WordPress allowed for media including images, video, and audio.

From an instructor’s perspective, Blackboard can be a bit of a headache. The myriad of menus and tabs in Blackboard can be a bit confusing. I remember at the beginning of each semester knowing that a colleague of mine would come knocking on my office door asking how to open their class. It was certainly not intuitive, which seems strange for something as important as making your class available to your students.

Also, while Blackboard has a number of useful features, including some very important accessibility features, they are not easy to find, and the help menu isn’t much help. This isn’t a huge problem since most large institutions have someone who serves as a Blackboard administrator, but I do think that ease of use of a system will influence how thoughtfully and effectively instructors will use it.

Having worked with Blackboard as both an instructor and student over the course of more than a decade, I can say that the UX is not great, and it doesn’t seem to be getting better very rapidly.

Next week, I am interested in exploring how Google Classroom compares to Blackboard. While it seems to have fewer customizable features, it may be that being able to include the whole suite of Google tools will make Google Classroom a viable alternative that has the advantage of being free.

Resources

Woo, H. (2013). The Design of Online Learning Environments from the Perspective of Interaction. Educational Technology, 53(6), 34–38. Retrieved from http://www.jstor.org.proxy1.cl.msu.edu/stable/44430215

Here is the latest version of my assessment design checklist.

A few things to note:

The big things that I have learned in the process of doing this project:

Here is a link to my Assessment Checklist 3.0. Feel free to comment and let me know what you think.